Cosmic Rays

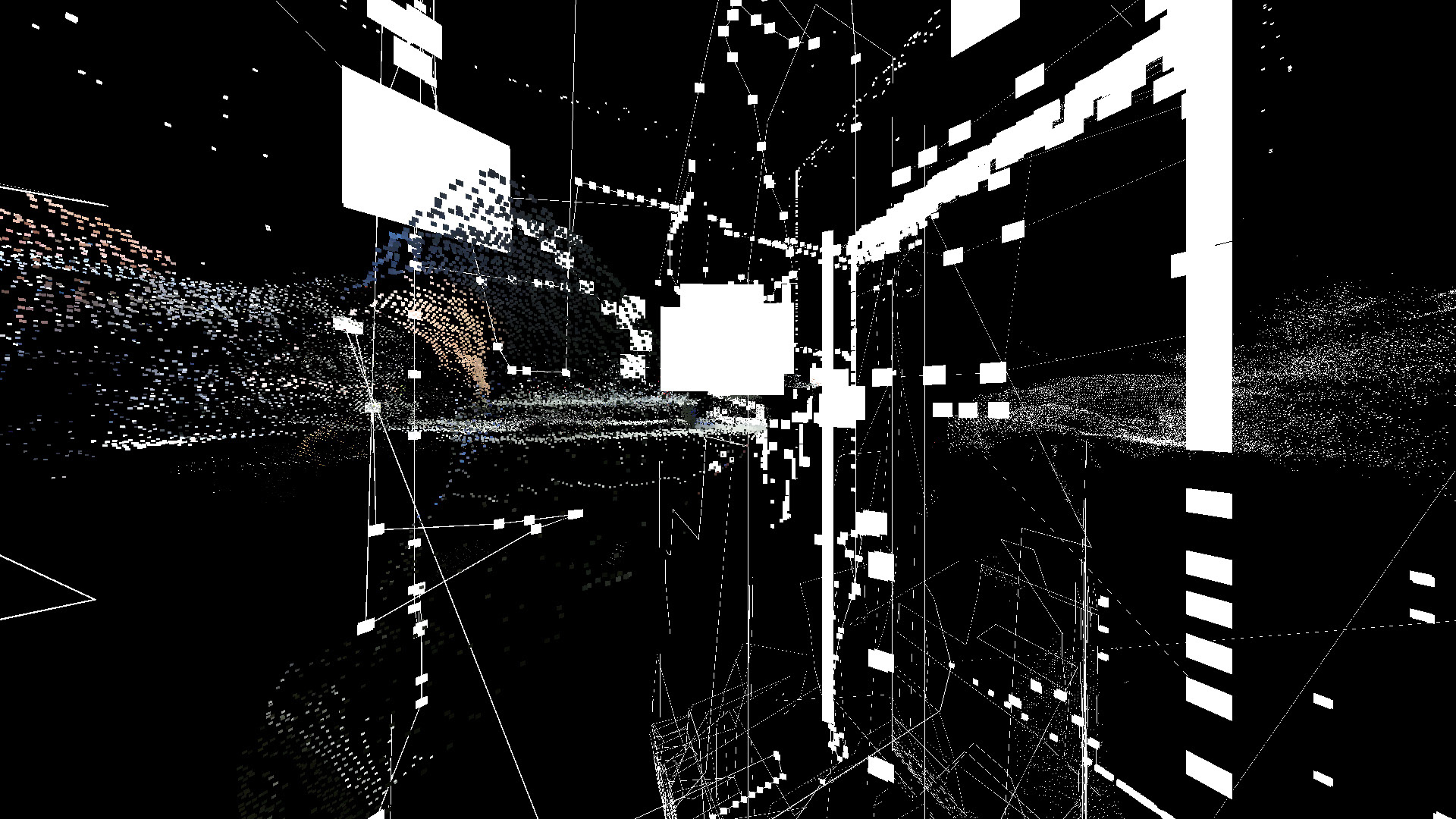

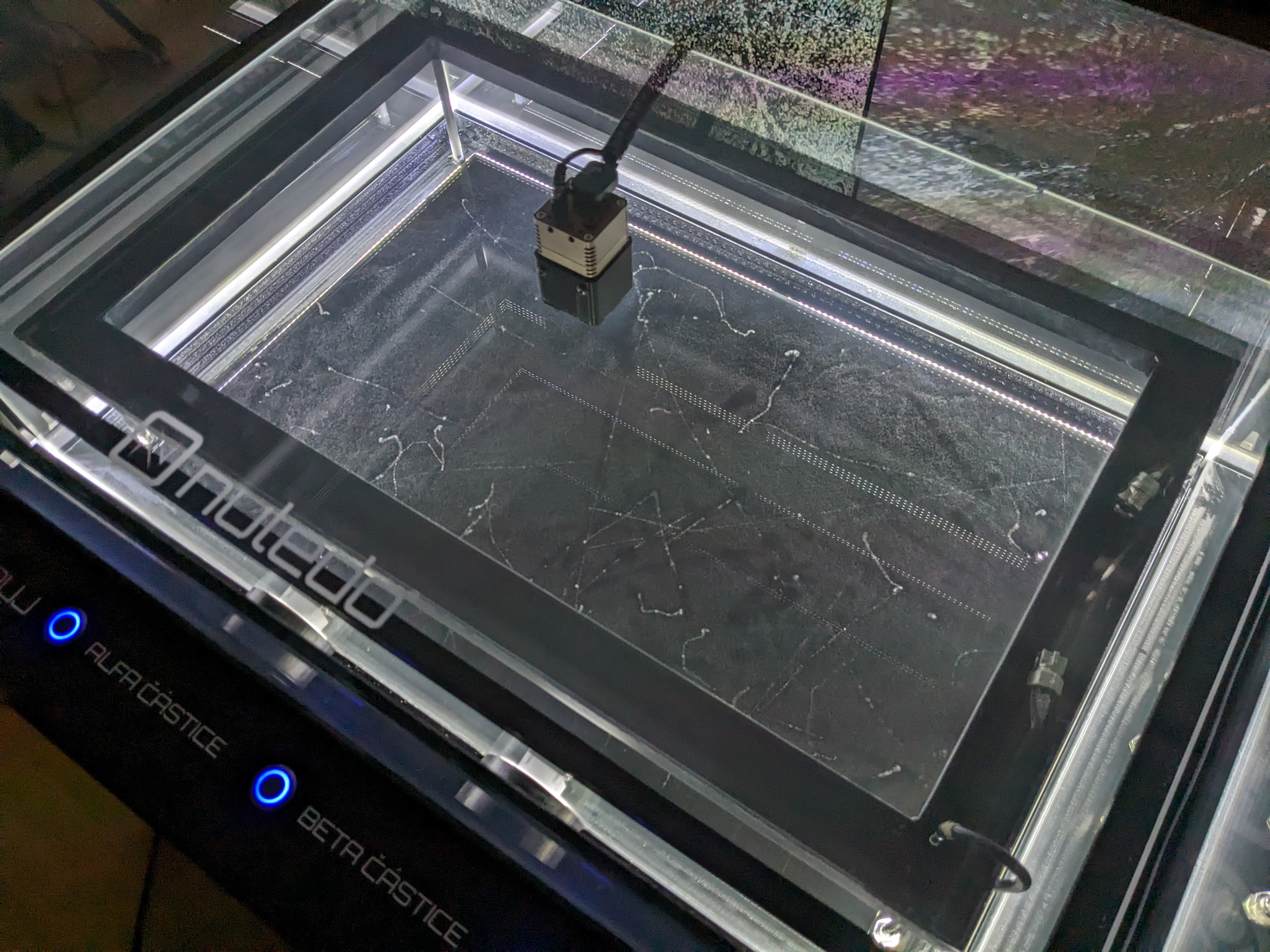

Cosmic Rays was an experiment that emerged from a two-day workshop at the Institute of Physics of the Czech Academy of Sciences in December 2023. The workshop focused on video signal processing using a broad range of hardware and software technologies. A single camera, capturing images from a cloud chamber, was integrated into a complex audiovisual system. The cloud chamber, a particle detector, displayed in real time the trajectories of particles arriving from outer space as well as those injected via button triggers. The video signal was split and routed to multiple inputs, each processed differently.

The first input was processed in hardware by an FPGA unit, with an edge detection algorithm encoded into its circuitry. The second input was connected to a sonification synthesizer that converted the video signal into a real-time soundscape. The resulting audio was then brought into Resolume Arena, a software application for real-time image processing and manipulation, which received the third video input. There, real-time FFT audio analysis drove the ripple effect applied to the live camera feed, so the image was distorted by sound generated from the image itself: a system that manipulated itself.
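Resolume Arena's own audio analysis is not described here, so the following is only a generic sketch, under stated assumptions, of how an FFT-driven parameter mapping of this kind can work: measure the energy in one frequency band of an audio buffer, then smooth it into a ripple amount between 0 and 1. The names `band_energy` and `RippleMapper`, the band limits, and the gain and smoothing values are all hypothetical.

```python
import cmath
import math


def band_energy(samples, sample_rate, f_lo, f_hi):
    """Mean DFT magnitude of the bins between f_lo and f_hi (Hz).

    A naive O(N^2) DFT keeps the sketch dependency-free; a real-time
    system would use an optimized FFT instead.
    """
    n = len(samples)
    # Hann window to reduce spectral leakage between bins
    windowed = [s * 0.5 * (1 - math.cos(2 * math.pi * i / (n - 1)))
                for i, s in enumerate(samples)]
    mags = []
    for k in range(n // 2 + 1):
        freq = k * sample_rate / n
        if f_lo <= freq <= f_hi:
            acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                      for i, x in enumerate(windowed))
            mags.append(abs(acc) / n)
    return sum(mags) / len(mags) if mags else 0.0


class RippleMapper:
    """Hypothetical mapping from band energy to a ripple amount in [0, 1].

    Fast attack and slow release keep the effect from flickering.
    """

    def __init__(self, gain=4.0, attack=0.5, release=0.1):
        self.gain = gain
        self.attack = attack
        self.release = release
        self.value = 0.0

    def update(self, energy):
        target = min(1.0, energy * self.gain)
        coeff = self.attack if target > self.value else self.release
        self.value += coeff * (target - self.value)
        return self.value
```

Calling `update(band_energy(buffer, rate, 60, 250))` once per audio buffer would yield a value that could drive a ripple strength. Because the audio itself comes from sonifying the camera image, this closes the feedback loop described above.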

The fourth input fed a 3D application that also used an Azure Kinect depth camera aimed at the cloud chamber. Its depth stream was rendered as a particle system with Unity VFX Graph, with the main video signal serving as a live texture on the particles. In addition, audio analysis within Unity controlled the particle size. Finally, the original video was shown on a 2x4 video wall driven by a separate dedicated hardware setup.
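Turning a depth stream into particles usually starts with back-projecting each depth pixel into 3D using the pinhole camera model, x = (u - cx) z / fx and y = (v - cy) z / fy. The sketch below shows that step on the CPU, plus a hypothetical mapping from an audio level to particle size. In the actual project this ran inside Unity VFX Graph, typically on the GPU. The intrinsics, the `step` subsampling, and the size constants are assumptions; real values would come from the Azure Kinect's factory calibration.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole camera parameters in pixels (hypothetical values in practice)."""
    fx: float
    fy: float
    cx: float
    cy: float


def depth_to_points(depth, width, height, K, step=1):
    """Back-project a row-major depth image (metres) into camera-space points."""
    points = []
    for v in range(0, height, step):
        for u in range(0, width, step):
            z = depth[v * width + u]
            if z <= 0:  # zero means no valid depth reading at this pixel
                continue
            x = (u - K.cx) * z / K.fx
            y = (v - K.cy) * z / K.fy
            points.append((x, y, z))
    return points


def particle_size(level, base=0.005, scale=0.02):
    """Map a normalized audio level to a particle size; constants are hypothetical."""
    level = max(0.0, min(1.0, level))
    return base + scale * level
```

Each returned point would become one particle position, sampling the video texture at the same pixel.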

The workshop opened new avenues for experimental work with live video signals in science communication. Working with live signals from cosmic particles was a unique and truly mind-bending experience.

Collaboration with Jan Zobal (Institute of Physics), Radek Leffner (Flar), Jiri Vyskocil (HZDR - CASUS)

Volumetric Capture for Science Communication

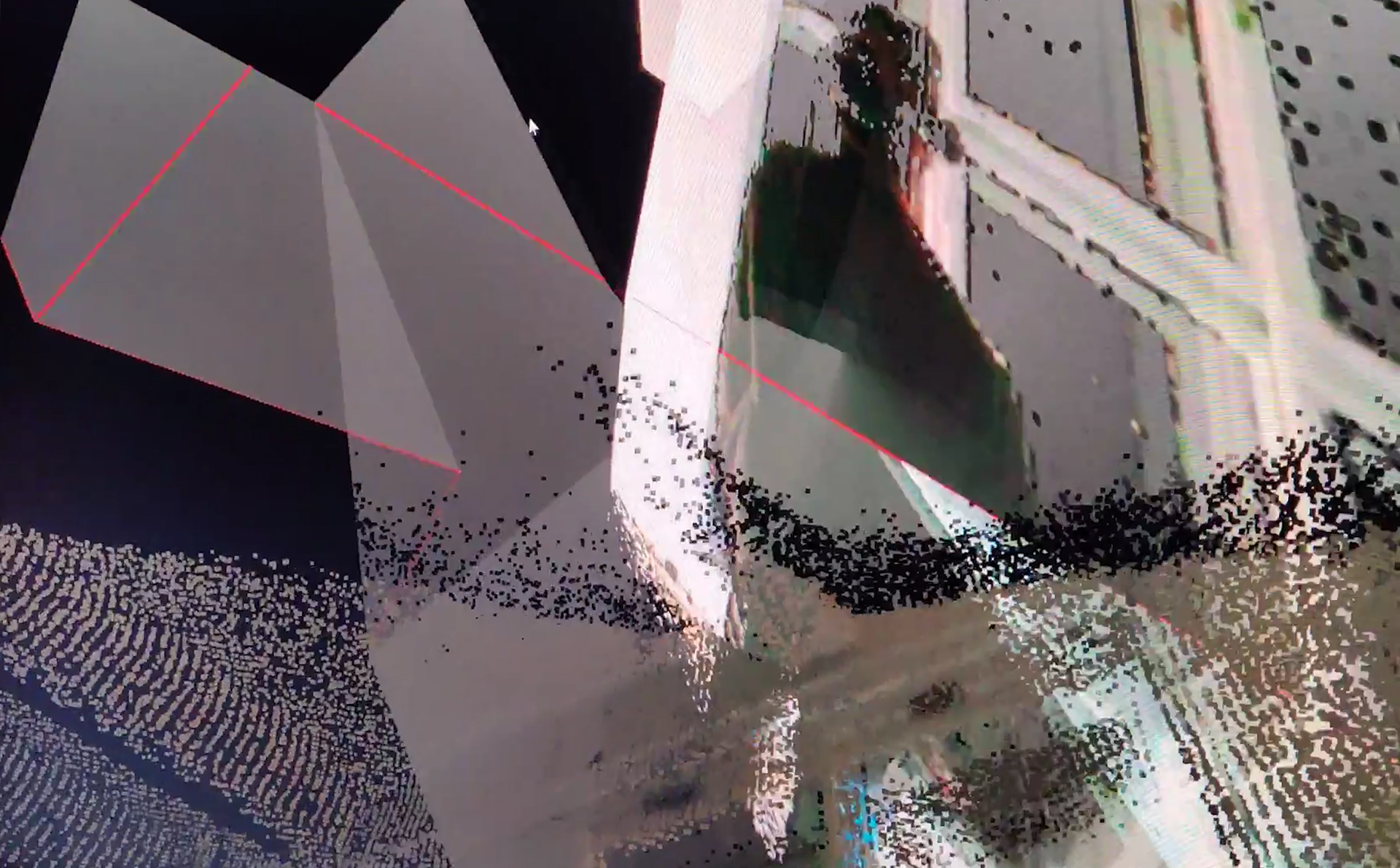

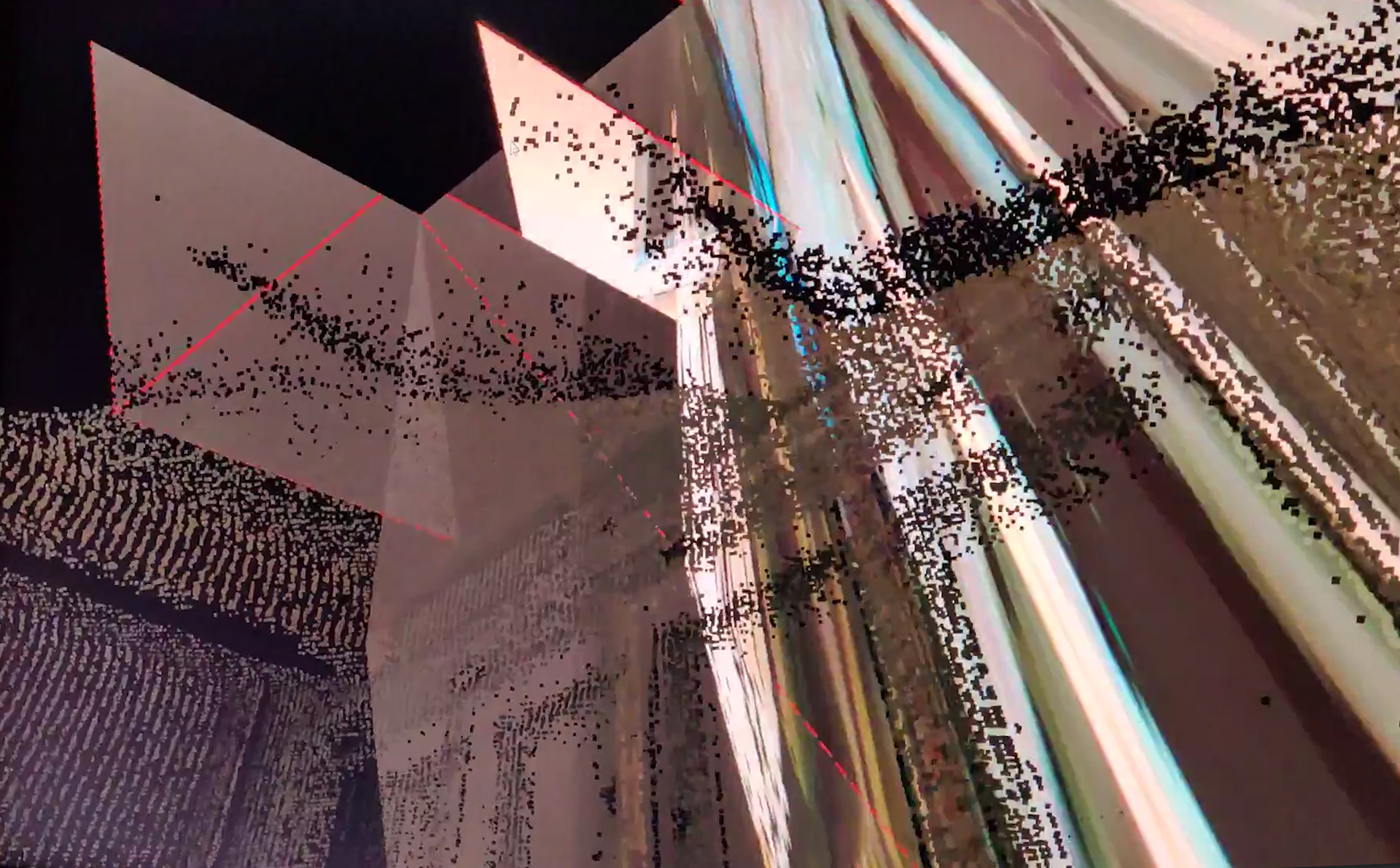

In 2022, I developed an experimental approach to using volumetric capture for science communication. The project involved a HoloLens 2 AR application featuring a scaled-down 3D model of a complex scientific laser system. I invited scientists to give spatial descriptions of these lasers in a 360-degree green-screen environment. Their volumetric sequences were captured with three synchronized Azure Kinect sensors using the Brekel Point Cloud software.

After post-production and implementation in Unity 3D, VR users could approach the recorded scientist in the scene, which triggered playback so they could see and hear the spatial description of the system. Crucially, the scientists could point to specific locations on the 3D model, because the model was placed exactly where they had seen its hologram in HoloLens 2 during the recording. The technology and setup had limitations in resolution and calibration precision, which were addressed with both AI-based and manual adjustments. Still, the concept and the experiments with it show potential for creatively bridging XR technologies with 4D scanning.
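The text does not say how playback was triggered. A common approach, assumed here, is a distance check between the viewer and the recorded scientist, with two radii (hysteresis) so the recording fires once on approach and re-arms only after the viewer has clearly stepped away. The class name and radii are hypothetical.

```python
import math


class ProximityTrigger:
    """Hypothetical trigger: fires once when the viewer comes within
    enter_radius of the anchor, re-arms after they move beyond exit_radius."""

    def __init__(self, anchor, enter_radius=1.5, exit_radius=2.0):
        if exit_radius < enter_radius:
            raise ValueError("exit_radius must be >= enter_radius")
        self.anchor = anchor
        self.enter_radius = enter_radius
        self.exit_radius = exit_radius
        self.armed = True

    def update(self, viewer_pos):
        """Call once per frame; returns True on the frame playback should start."""
        d = math.dist(viewer_pos, self.anchor)
        if self.armed and d <= self.enter_radius:
            self.armed = False
            return True  # start the volumetric sequence
        if not self.armed and d > self.exit_radius:
            self.armed = True  # viewer walked away; allow replay next time
        return False
```

The gap between the two radii prevents repeated restarts when a viewer hovers near a single threshold distance.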

Collaboration with Jan Zobal and Dan Hasalik (Institute of Physics of the Czech Academy of Sciences), Radek Poboril (HiLase Laser Center)

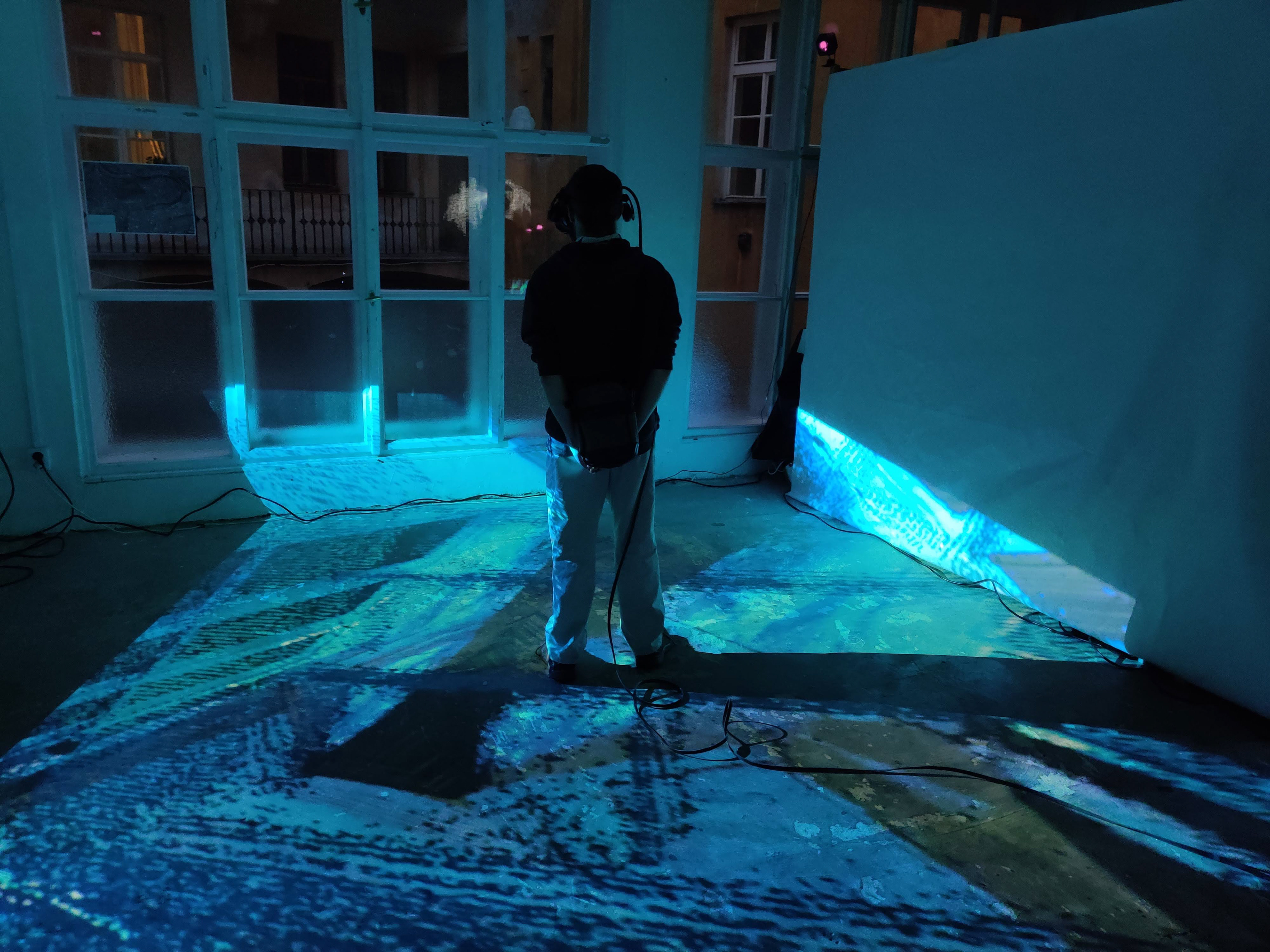

Hypercube VR at Cyberspace 2019 Workshop

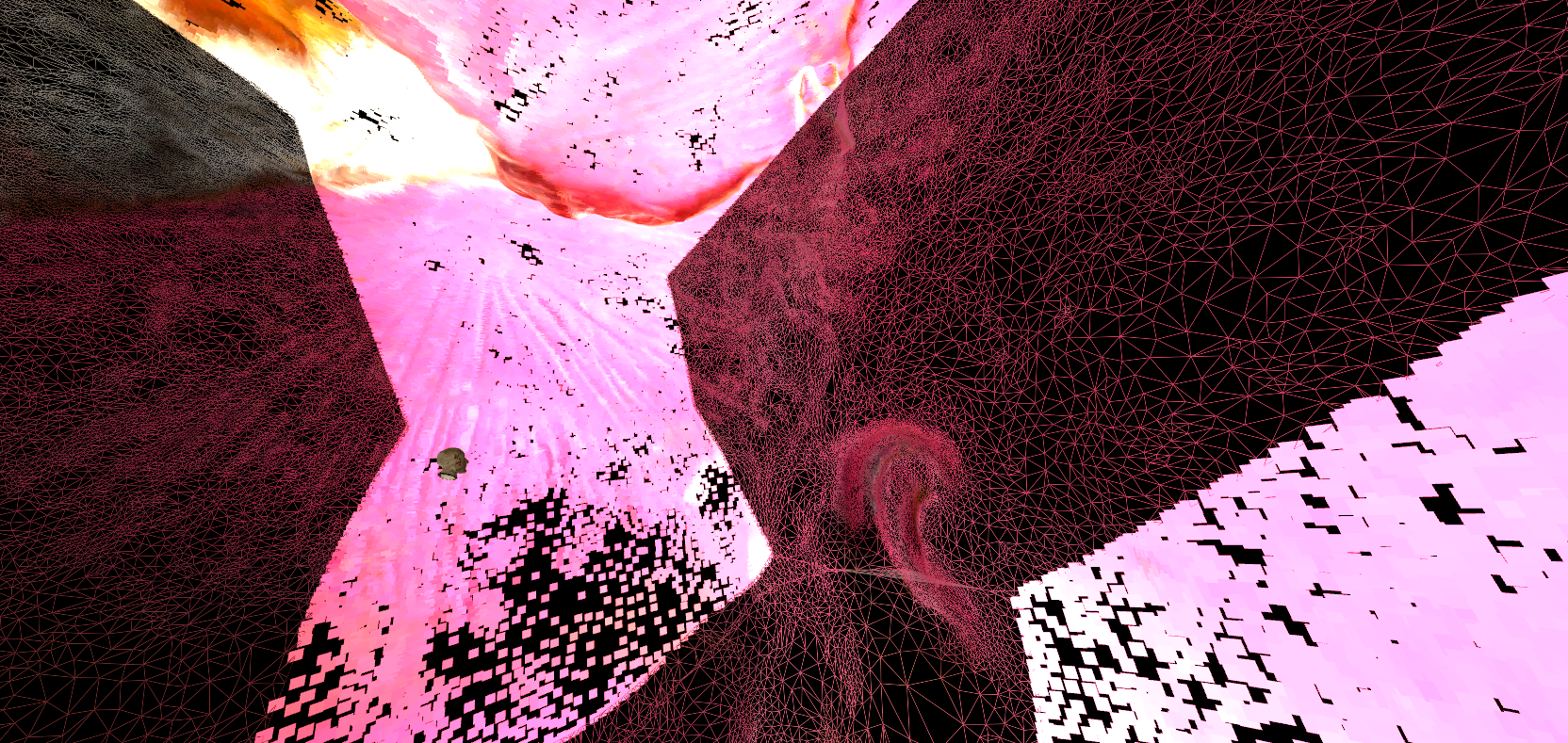

How can a 4D hypercube be visualized in VR? What happens when a real-time 3D scan of an environment is superimposed onto the physical space? An experiment created during a Cyberspace workshop at Prague City University in 2019 partially answered these questions and raised even more intriguing ones. Built with Unity 3D, an HTC Vive headset, and a Kinect depth camera, this immersive art installation prototype transported participants into a higher-dimensional space, where the 4D perspective was mapped onto the orientation and position of the VR headset.

In addition, an interactive real-time 3D scan of the installation space, rendered as a particle system and calibrated to align with the physical room, was integrated into the scene. This let participants perceive themselves and their surroundings in novel forms while also playing with dimensional complexity. To extend the experience further, the 2D color feed from the Kinect camera was mapped as a live texture onto the 4D hypercube generated in code.
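The installation's code is not shown, but the standard recipe for drawing a hypercube is: generate its 16 vertices and 32 edges, rotate the vertices in planes that involve the fourth axis w, then apply a perspective projection from 4D to 3D. The sketch below follows that recipe. The mapping of headset yaw and pitch to the xw and yw rotation planes is a hypothetical choice, it covers orientation only (headset position could, for example, shift the 4D viewer distance), and `viewer_w = 3.0` is an assumed value.

```python
import itertools
import math


def tesseract_vertices():
    """The 16 vertices of a hypercube centred on the origin: all (±1, ±1, ±1, ±1)."""
    return [tuple(v) for v in itertools.product((-1.0, 1.0), repeat=4)]


def tesseract_edges(vertices):
    """Vertices share an edge when they differ in exactly one coordinate (32 edges)."""
    return [(i, j) for i, j in itertools.combinations(range(len(vertices)), 2)
            if sum(a != b for a, b in zip(vertices[i], vertices[j])) == 1]


def rotate(v, axis_a, axis_b, angle):
    """Rotate a 4D point in the plane of two coordinate axes (0=x, 1=y, 2=z, 3=w)."""
    c, s = math.cos(angle), math.sin(angle)
    out = list(v)
    out[axis_a] = c * v[axis_a] - s * v[axis_b]
    out[axis_b] = s * v[axis_a] + c * v[axis_b]
    return tuple(out)


def project_to_3d(v, viewer_w=3.0):
    """4D-to-3D perspective: points closer to the 4D viewer (larger w) appear larger."""
    x, y, z, w = v
    k = viewer_w / (viewer_w - w)  # safe: |w| <= 2 for these vertices
    return (x * k, y * k, z * k)


def frame(headset_yaw, headset_pitch):
    """Hypothetical mapping: yaw drives the xw rotation, pitch drives the yw rotation."""
    out = []
    for v in tesseract_vertices():
        v = rotate(v, 0, 3, headset_yaw)
        v = rotate(v, 1, 3, headset_pitch)
        out.append(project_to_3d(v))
    return out
```

Drawing a line for every pair in `tesseract_edges` between the projected points gives the familiar "cube within a cube" that folds through itself as the headset turns.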

Collaboration with Jiri Vyskocil

Puppet Head VR at Cyberspace 2018 Workshop

Created during a Cyberspace workshop at Prague City University in 2018, the Puppet Head was a VR experiment that explored the duality of physical and digital realities. The installation centered on a single object: a small pink puppet head. An HTC Vive Tracker, a device whose position is tracked in 3D in real time within a VR setup, was attached to its base. Additionally, a 3D scan of the puppet head was created and imported into the Unity 3D application.

The physical puppet head and its digital counterpart in VR were calibrated so that their positions matched. Participants could hold the object, freely manipulate it, and simultaneously see its 3D version in VR. Moreover, the tracked 3D model was duplicated and enlarged, effectively turning it into an immersive environment, so participants found themselves inside the head, manipulating their own surroundings.
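In Unity this is usually done by parenting the scanned model to the tracked object with a fixed local offset. The sketch below spells out the underlying math as rigid transforms (R, t) mapping p to Rp + t. It assumes the calibration amounts to one fixed tracker-to-head offset, and that the enlarged copy shares the hand-held head's rotation so that turning the real head turns the surrounding environment. All function names and values are hypothetical.

```python
def mat_mul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def mat_vec(m, v):
    """Apply a 3x3 matrix to a 3-vector."""
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(3))


def compose(outer, inner):
    """Compose two transforms (R, t); `inner` is applied first."""
    r_o, t_o = outer
    r_i, t_i = inner
    moved = mat_vec(r_o, t_i)
    return mat_mul(r_o, r_i), tuple(moved[k] + t_o[k] for k in range(3))


def hand_head_pose(tracker_pose, calibration):
    """Hand-held virtual head: live tracker pose followed by the fixed
    tracker-to-head offset measured once during calibration."""
    return compose(tracker_pose, calibration)


def room_head_transform(head_pose, scale, room_center):
    """Enlarged duplicate: same rotation as the hand-held head, uniformly
    scaled and centred on the room (linear part is scale * R)."""
    rotation, _ = head_pose
    linear = [[scale * rotation[i][j] for j in range(3)] for i in range(3)]
    return linear, tuple(room_center)
```

Because both copies are derived from the same tracker pose every frame, the small head in the participant's hands and the room-sized head around them always move together.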

3D Scan by Marketa Vychodilova

Electric Spring 2017: Interactive Media and VR Workshop

In 2017, Pascal Silondi and I were invited to lead a week-long workshop on interactive media and VR at the University of Huddersfield (UK). Together with Jorge Boehringer from the university, we worked with a group of students to create several installations for the Electric Spring festival.

My primary focus was a VR installation built around a complex, dynamic composition: a monochrome real-time 3D scan from a Kinect sensor, sequences of single-sensor volumetric capture, dynamic spring-based generative structures, and a hand tracking and gesture navigation system. Together, these elements formed a single immersive experience for festival attendees.
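The text does not describe how the spring-based structures were implemented. A typical basis for such generative structures is a mass-spring network: Hooke's law pulls each connected pair of nodes toward a rest length, and the nodes are integrated with semi-implicit Euler plus velocity damping. The sketch below assumes unit masses, a single rest length for all springs, and a 90 Hz frame rate; the stiffness and damping values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    pos: list
    vel: list
    pinned: bool = False  # pinned nodes anchor the structure in place


def step(nodes, springs, rest_length, stiffness=40.0, damping=0.9, dt=1 / 90):
    """Advance a mass-spring network by one frame (unit masses, semi-implicit Euler)."""
    forces = [[0.0, 0.0, 0.0] for _ in nodes]
    for i, j in springs:
        d = [nodes[j].pos[k] - nodes[i].pos[k] for k in range(3)]
        length = sum(c * c for c in d) ** 0.5
        if length == 0:
            continue
        # Hooke's law: positive when stretched, pulling i toward j and j toward i
        f = stiffness * (length - rest_length)
        for k in range(3):
            fk = f * d[k] / length
            forces[i][k] += fk
            forces[j][k] -= fk
    for n, f in zip(nodes, forces):
        if n.pinned:
            continue
        for k in range(3):
            # Update velocity first, then position with the new velocity
            n.vel[k] = (n.vel[k] + f[k] * dt) * damping
            n.pos[k] += n.vel[k] * dt
```

Displacing nodes from outside, for example from tracked hand positions, would make the structure wobble and settle back, which is the kind of organic motion spring systems are often chosen for.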